Lightly Pretagging

The LightlyOne Worker supports the use of pre-trained models to tag the raw data before sampling. We call this pretagging. For now, we offer a pre-trained model for object detection optimized for autonomous driving.

Using a pre-trained model does not remove the need for high-quality human annotations. However, the model predictions give a rough idea of the underlying class distribution within the dataset.

The model is capable of detecting the following core classes:

- bicycle

- bus

- car

- motorcycle

- person

- train

- truck

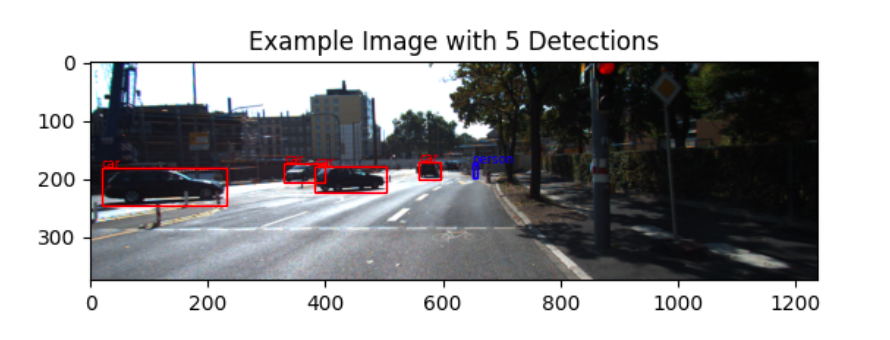

The plot shows the bounding boxes detected by the pretagging model, overlaid on the image.

The pretagging model is a Faster R-CNN with a ResNet-50 backbone, trained on a dataset of ~100k images.

Selection

You can enable pretagging when scheduling a new run by setting pretagging to True in the worker configuration. The pretagging predictions can then be used in the selection configuration by setting lightly_pretagging as the task name. The example below shows how to use pretagging to balance your data based on a target distribution of the detected objects.
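The target of a balancing strategy maps each class name to a ratio. If it is easier to think in approximate per-class counts, the ratios can be derived with a few lines of plain Python. The sketch below is only an illustration: `counts_to_target_ratios` is a hypothetical helper and not part of the Lightly API, and it assumes the ratios are meant to sum to one, as they do in the example on this page.

```python
def counts_to_target_ratios(desired_counts):
    """Convert rough per-class counts into ratios that sum to 1.

    Hypothetical helper for illustration; it is not part of the Lightly API.
    """
    if any(count < 0 for count in desired_counts.values()):
        raise ValueError("counts must be non-negative")
    total = sum(desired_counts.values())
    if total <= 0:
        raise ValueError("at least one count must be positive")
    return {name: count / total for name, count in desired_counts.items()}


# Rough wish list for 100 selected images, using the pretagging class names.
target = counts_to_target_ratios(
    {
        "bicycle": 10,
        "bus": 10,
        "car": 50,
        "motorcycle": 10,
        "person": 10,
        "train": 5,
        "truck": 5,
    }
)
```

The resulting dictionary has the same shape as the target used in the scheduling call that follows.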

    from lightly.api import ApiWorkflowClient
    from lightly.openapi_generated.swagger_client import DatasetType

    # Create the LightlyOne client to connect to the API.
    client = ApiWorkflowClient(token="MY_LIGHTLY_TOKEN", dataset_id="MY_DATASET_ID")

    scheduled_run_id = client.schedule_compute_worker_run(
        worker_config={
            "pretagging": True,
        },
        selection_config={
            "n_samples": 100,
            "strategies": [
                {
                    "input": {
                        "type": "PREDICTIONS",
                        "task": "lightly_pretagging",
                        "name": "CLASS_DISTRIBUTION",
                    },
                    "strategy": {
                        "type": "BALANCE",
                        "target": {
                            "bicycle": 0.1,
                            "bus": 0.1,
                            "car": 0.5,
                            "motorcycle": 0.1,
                            "person": 0.1,
                            "train": 0.05,
                            "truck": 0.05,
                        },
                    },
                }
            ],
        },
    )

Object Diversity

Pretagging can also be used to run LightlyOne with object diversity. This can be enabled by using lightly_pretagging as the task name in the selection config. Here we show an example of how to select images with diverse objects:

    scheduled_run_id = client.schedule_compute_worker_run(
        worker_config={
            "pretagging": True,
        },
        selection_config={
            "n_samples": 100,
            "strategies": [
                {
                    "input": {
                        "type": "EMBEDDINGS",
                        "task": "lightly_pretagging",
                    },
                    "strategy": {
                        "type": "DIVERSITY",
                    },
                }
            ],
        },
    )

Predictions Upload

For every run with pretagging enabled, LightlyOne also uploads the pretagging predictions to the Lightly datasource and saves them in the .lightly/predictions/lightly_pretagging directory. Each prediction file contains object detections for one image:

    // boxes have format x1, y1, x2, y2
    [
        {
            "filename": "image_1.png",
            "boxes": [
                [0.869, 0.153, 0.885, 0.197],
                [0.231, 0.175, 0.291, 0.202]
            ],
            "labels": ["person", "car"],
            "scores": [0.9845203757286072, 0.9323102831840515]
        },
        ...
    ]

Report

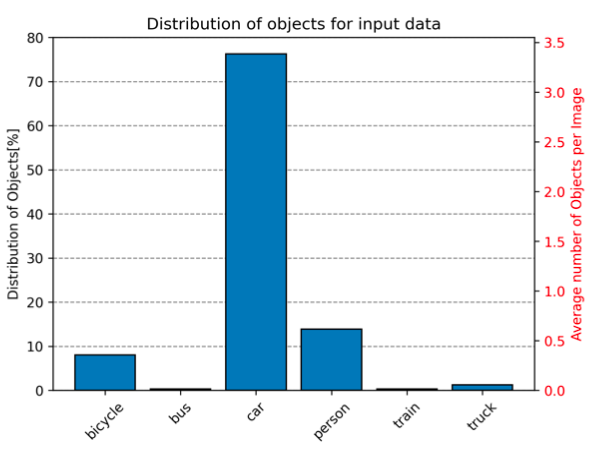

Pretagging results are visualized in the report. The report shows the object class histograms before and after the selection process. Below is an example of a histogram for the input data before filtering:

Histogram plot of the pretagging model for the input data (full dataset). The plot shows the distribution of the various detected classes and the average number of objects per image.
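The two quantities shown in the histogram, per-class counts and the average number of objects per image, can also be approximated offline from a local copy of the prediction files. The sketch below is not how the report is generated. It assumes each entry looks like the example in the Predictions Upload section (a dictionary with a "labels" list), that the files are plain JSON without the explanatory `//` comment, and that they use a `.json` extension. The function names are hypothetical.

```python
import json
from collections import Counter
from pathlib import Path


def summarize_predictions(entries):
    """Return (per-class label counts, average number of objects per image).

    `entries` is a list of dicts, each with a "labels" list holding one
    class name per detected box (assumed from the example prediction).
    """
    class_counts = Counter()
    for entry in entries:
        class_counts.update(entry.get("labels", []))
    num_images = len(entries)
    total_objects = sum(class_counts.values())
    avg_objects = total_objects / num_images if num_images else 0.0
    return class_counts, avg_objects


def load_entries(prediction_dir):
    """Collect entries from every *.json file in a local prediction folder.

    Assumption: a file holds either a single dict or a list of dicts.
    """
    entries = []
    for path in sorted(Path(prediction_dir).glob("*.json")):
        data = json.loads(path.read_text())
        entries.extend(data if isinstance(data, list) else [data])
    return entries


if __name__ == "__main__":
    example = [
        {"filename": "image_1.png", "labels": ["person", "car"]},
        {"filename": "image_2.png", "labels": ["car"]},
    ]
    counts, avg = summarize_predictions(example)
    print(dict(counts), avg)
```

For a downloaded copy of the predictions, `summarize_predictions(load_entries("path/to/local/copy"))` would give the same summary, where the path is a placeholder for wherever the files were saved.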
