Dagster

Data Pre-processing Pipeline on AWS with Dagster

Data collection and pre-processing pipelines have become increasingly automated in recent years. LightlyOne's ability to integrate with Dagster makes it a great building block for your machine learning pipeline.

This guide shows how to write a simple automated data pre-processing pipeline that performs the following steps:

- Download a random video from Pexels.

- Upload the video to an S3 bucket.

- Spin up an EC2 instance and start the LightlyOne Worker waiting for a job to process.

- Schedule a run for the LightlyOne Worker and wait till it has finished.

- Shut down the instance.

Here, the first two steps simulate a data collection process. For this pipeline, you will use Dagster, an open-source data orchestrator for machine learning. It enables building, deploying, and debugging data processing pipelines. See the Dagster documentation to learn more.

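The structure of the tutorial is as follows:

- Prerequisites

- Dagster pipeline for processing raw data

- Running and visualizing the Pipeline

- Results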

Prerequisites

Setting up the EC2 Instance

The first step is to set up the EC2 instance. For the purposes of this tutorial, it’s recommended to pick an instance with a GPU (like the g4dn.xlarge) and the “Deep Learning AMI (Ubuntu 18.04) Version 48.0” AMI. See this guide to get started. Connect to the instance.

Next, install LightlyOne on the instance by following the installation instructions. Finally, check that the installation on your cloud machine was successful by running the sanity check:

docker run --shm-size="1024m" --rm -it lightly/worker:latest sanity_check=True

To run the LightlyOne Worker remotely, create a new file /home/ubuntu/run.sh starting the docker container:

docker run --shm-size="1024m" --gpus all --rm -it \
    -e LIGHTLY_TOKEN="MY_LIGHTLY_TOKEN" \
    -e LIGHTLY_WORKER_ID="MY_WORKER_ID" \
    lightly/worker:latest

Grant Access to instance from SSM

In order to send commands remotely to your instance, you need to grant AWS Systems Manager access to your EC2 Instance. You can do that by following the AWS official documentation page.

Setting up the S3 Bucket

If you don’t have an S3 bucket already, follow the AWS official instructions to create one. You need two buckets to work with LightlyOne: an Input bucket and a Lightly bucket. Then configure your datasource with delegated access so that LightlyOne can access your data.

Local installation of code

You need to clone the accompanying GitHub repository to your local machine (e.g. your development notebook), where you will launch your pipeline that remotely controls your instance.

git clone https://github.com/lightly-ai/dagster-lightly-example.git
cd dagster-lightly-example
pip install -r requirements.txt

Setup of AWS CLI

If you haven't done so yet, install the AWS CLI on your local machine by following the official AWS CLI installation tutorial for your system. Check that the installation succeeded with the following commands:

which aws

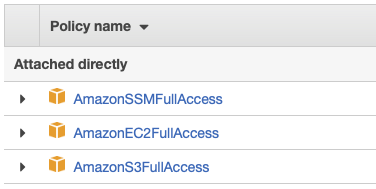

aws --versionIn order to make your local machine control your automated pipeline, you need your local AWS CLI to have the proper permissions. You may need to perform a quick setup. In particular, be sure to configure it with an AWS IAM User having the following Amazon AWS managed policies:

- AmazonEC2FullAccess: to turn on and off your EC2 machine.

- AmazonS3FullAccess: to upload your data on the S3 bucket.

- AmazonSSMFullAccess: to remotely run the script.

This should be your resulting AWS CLI user policy list:
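The same check can also be scripted. The sketch below is a minimal example, assuming boto3 is installed and that an IAM client created with `boto3.client("iam")` is passed in; the helper name `missing_policies` and any user name you pass are hypothetical. It only looks at managed policies attached directly to the user, so policies inherited through groups would not show up, and it does not handle paginated responses.

```python
REQUIRED_POLICIES = {
    "AmazonEC2FullAccess",
    "AmazonS3FullAccess",
    "AmazonSSMFullAccess",
}


def missing_policies(iam_client, user_name: str) -> set:
    """Return the required managed policies not attached directly to the user.

    `iam_client` is expected to behave like boto3.client("iam").
    """
    response = iam_client.list_attached_user_policies(UserName=user_name)
    attached = {policy["PolicyName"] for policy in response["AttachedPolicies"]}
    return REQUIRED_POLICIES - attached
```

An empty set means all three policies are attached to the user.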

If you followed the steps in Grant Access to instance from SSM correctly, you should now be able to reach your instance through SSM. Check whether it shows up in the SSM list with the following command. Be aware that it can take some hours for an instance to be added to the SSM list.

aws ssm describe-instance-information

The output should look similar to this:

{
    "InstanceInformationList": [
        {
            "InstanceId": "YOUR-INSTANCE-ID",
            "PingStatus": ...,
            [...]
        }
    ]
}

Dagster pipeline for processing raw data

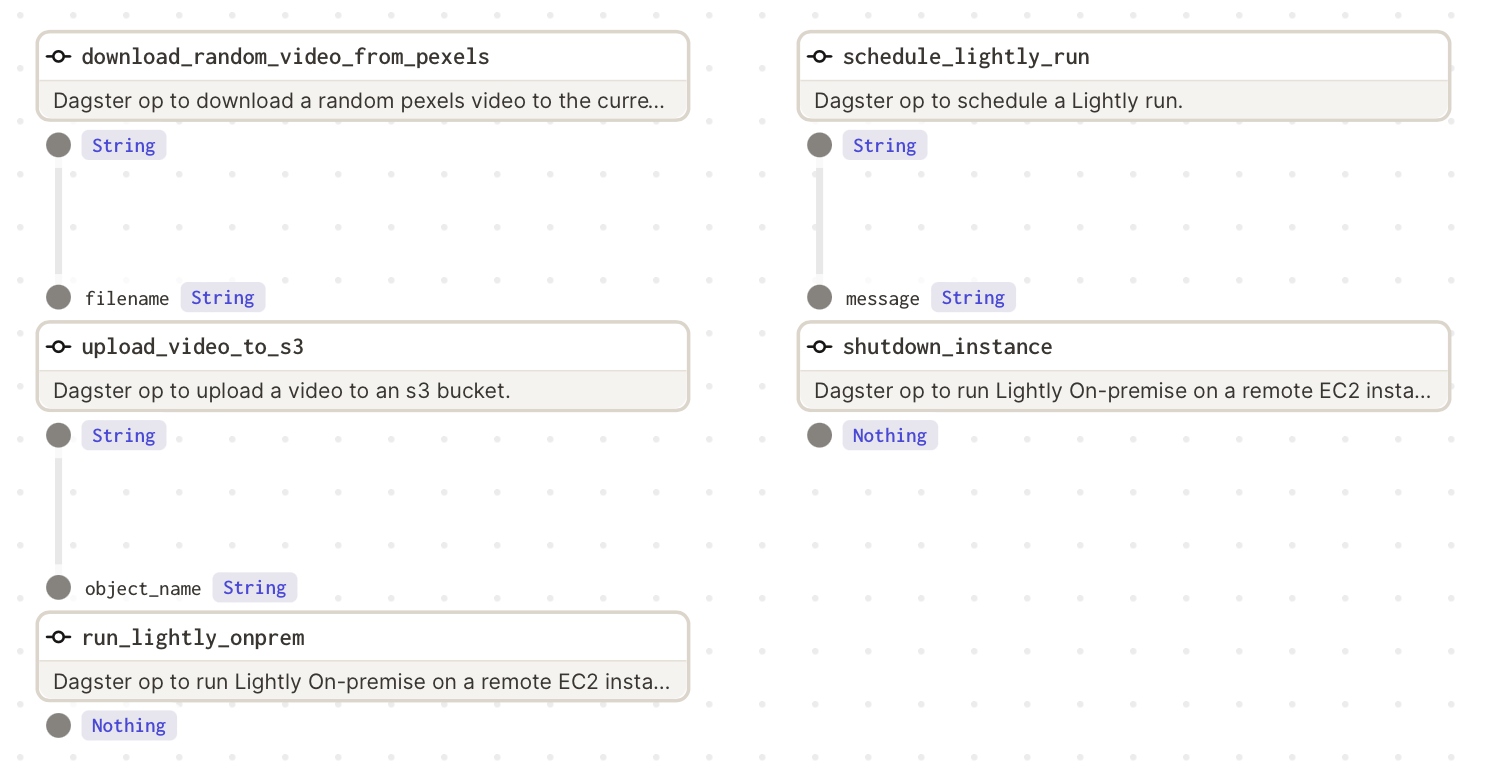

Dagster pipelines consist of several ops which can be chained one after each other in a job. You can see here the target job schema from dagit overview:

Dagit pipeline breakdown

You can see that the pipeline consists of five ops. Steps (1) and (2) run in parallel. Step (2.1) then shuts down the instance and closes the pipeline when the run ends.

- Download the random video

- Upload it to S3

- Turn on the instance (if off), and launch LightlyOne Worker

- Schedule a LightlyOne Worker run which sends the selection strategy to the worker

- Shut down the instance at the end of the run

Pipeline breakdown

The following paragraphs break down each Python file in the repository:

.
├── aws_example_job.py
├── ops
│   ├── aws
│   │   ├── ec2.py
│   │   └── s3.py
│   ├── lightly_run.py
│   └── pexels.py

ops/pexels.py

Contains the download_random_video_from_pexels op, which downloads a random video from Pexels and saves it in the current working directory. Don’t forget to set the PEXELS_API_KEY. You can get a key from Pexels after setting up a free account.
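To illustrate what such an op might do internally, here is a minimal standard-library sketch. It is not the repository's implementation: the endpoint URL, the response field names (`videos`, `video_files`, `width`, `height`, `link`), and all function names are assumptions based on the public Pexels API and should be checked against its documentation.

```python
import json
import os
import random
import urllib.request

# Assumed endpoint: the Pexels "popular videos" listing.
PEXELS_POPULAR_URL = "https://api.pexels.com/videos/popular?per_page=15"


def fetch_popular_videos(api_key: str) -> dict:
    """Fetch one page of popular videos; the key goes in the Authorization header."""
    request = urllib.request.Request(PEXELS_POPULAR_URL, headers={"Authorization": api_key})
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.load(response)


def pick_random_video_link(payload: dict, rng: random.Random) -> str:
    """Pick a random video and return the link of its largest file by pixel count."""
    video = rng.choice(payload["videos"])
    best_file = max(
        video["video_files"],
        key=lambda f: (f.get("width") or 0) * (f.get("height") or 0),
    )
    return best_file["link"]


def download_random_video(api_key: str, target: str = "video.mp4") -> str:
    """Download a random popular video into the current working directory."""
    link = pick_random_video_link(fetch_popular_videos(api_key), random.Random())
    urllib.request.urlretrieve(link, target)
    return os.path.abspath(target)
```

Keeping the selection logic in `pick_random_video_link` separate from the network calls makes it easy to test with a canned response.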

ops/aws/s3.py

Contains the upload_video_to_s3 op, which uploads the downloaded video from Pexels to your Input bucket. Don’t forget to set the S3_INPUT_BUCKET and S3_REGION parameters.
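The upload itself can be quite small. The following sketch assumes boto3 and an S3 client created with something like `boto3.client("s3", region_name=S3_REGION)`; the function name `upload_video_to_bucket` and the optional `prefix` argument are hypothetical and are not taken from the repository.

```python
import os


def upload_video_to_bucket(s3_client, filename: str, bucket: str, prefix: str = "") -> str:
    """Upload a local file and return the object key it was stored under.

    `s3_client` is expected to behave like boto3.client("s3").
    """
    # Build "<prefix>/<basename>", or just "<basename>" when no prefix is given.
    key = f"{prefix.strip('/')}/{os.path.basename(filename)}".lstrip("/")
    s3_client.upload_file(filename, bucket, key)
    return key
```

Passing the client in as an argument keeps credentials and region handling outside the function.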

ops/aws/ec2.py

Contains the run_lightly_onprem op, which turns on your EC2 machine and starts your LightlyOne Worker using the run.sh script saved in the /home/ubuntu/ folder of your EC2 instance, and the shutdown_instance op, which turns off your machine. Don’t forget to set the EC2_INSTANCE_ID and EC2_REGION parameters. You can find your instance ID on the AWS EC2 page.
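As a rough illustration of how these two operations could be expressed with boto3, consider the sketch below. It assumes clients created with `boto3.client("ec2", region_name=EC2_REGION)` and `boto3.client("ssm", region_name=EC2_REGION)`; the function names are hypothetical, and the repository's ops may be structured differently.

```python
def start_instance_and_worker(ec2_client, ssm_client, instance_id: str) -> str:
    """Start the instance, wait until it is running, then launch run.sh through SSM.

    Returns the SSM command ID so the caller can inspect the command later.
    """
    ec2_client.start_instances(InstanceIds=[instance_id])
    # Block until EC2 reports the instance as running.
    ec2_client.get_waiter("instance_running").wait(InstanceIds=[instance_id])
    response = ssm_client.send_command(
        InstanceIds=[instance_id],
        DocumentName="AWS-RunShellScript",
        Parameters={"commands": ["sh /home/ubuntu/run.sh"]},
    )
    return response["Command"]["CommandId"]


def stop_instance(ec2_client, instance_id: str) -> None:
    """Stop the instance once the run has finished."""
    ec2_client.stop_instances(InstanceIds=[instance_id])
```

Note that an instance in the running state is not necessarily reachable through SSM yet, so a real implementation would likely retry `send_command` or wait for the SSM agent to come online first.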

ops/lightly_run.py

Contains the run_lightly_onprem op that schedules a run and monitors it via the LightlyOne APIs. The selection_config will make the LightlyOne Worker choose 10% of the frames from the initial video that are as diverse as possible. This is done using the embeddings, which are automatically created during the run. Don’t forget to set all the required parameters.
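For orientation, a selection configuration that keeps 10% of the frames based on embedding diversity, together with a scheduling and monitoring loop, might look like the sketch below. The configuration keys and the client methods (`schedule_compute_worker_run`, `compute_worker_run_info_generator`, `ended_successfully`) reflect the `lightly` Python client as best recalled and should be verified against the installed version. The function name `schedule_and_wait` is hypothetical, and `client` is assumed to be a `lightly.api.ApiWorkflowClient` with the dataset already set.

```python
# Select 10% of the input frames, maximizing diversity in embedding space.
SELECTION_CONFIG = {
    "proportion_samples": 0.1,
    "strategies": [
        {"input": {"type": "EMBEDDINGS"}, "strategy": {"type": "DIVERSITY"}},
    ],
}


def schedule_and_wait(client) -> bool:
    """Schedule a run and follow its state changes until it ends.

    Returns True if the final reported state indicates success.
    """
    scheduled_run_id = client.schedule_compute_worker_run(selection_config=SELECTION_CONFIG)
    run_info = None
    for run_info in client.compute_worker_run_info_generator(scheduled_run_id=scheduled_run_id):
        print(f"state={run_info.state} message={run_info.message}")
    return run_info is not None and run_info.ended_successfully()
```

The boolean result is what a downstream shutdown step could wait on before stopping the instance.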

aws_example_job.py

Puts everything together in a Dagster job.
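To show how ops are wired into a job, here is a reduced two-op sketch. It is not the repository's job: the step functions are placeholders with hypothetical names, and the real job contains five ops with the dependencies described above.

```python
def download_step() -> str:
    """Placeholder for the download logic; returns a local filename."""
    return "video.mp4"


def upload_step(filename: str, bucket: str = "my-input-bucket") -> str:
    """Placeholder for the upload logic; returns the resulting S3 URI."""
    return f"s3://{bucket}/{filename}"


def build_job():
    """Wire the two placeholder steps into a Dagster job."""
    # Imported here so the plain step functions stay usable without Dagster.
    from dagster import job, op

    @op
    def download_video() -> str:
        return download_step()

    @op
    def upload_video(filename: str) -> str:
        return upload_step(filename)

    @job
    def sketch_job():
        # Passing one op's output to another declares the dependency between them.
        upload_video(download_video())

    return sketch_job
```

With Dagster installed, `build_job().execute_in_process()` would run the sketch locally. Ops that do not consume each other's outputs have no dependency between them, which is what allows independent steps to be scheduled in parallel.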

Running and visualizing the Pipeline

Dagit lets you visualize pipelines in a web interface. The following command shows the above pipeline in your browser at 127.0.0.1:3000:

dagit -f aws_example_job.py

You can execute the pipeline easily:

dagster pipeline execute -f aws_example_job.py

Results

You can see the selection results directly in the LightlyOne Platform and export them as full images, filenames, or as a labeling task.
